Award-Winning Debugging Tool Integrates AI to Diagnose and Resolve Software Issues

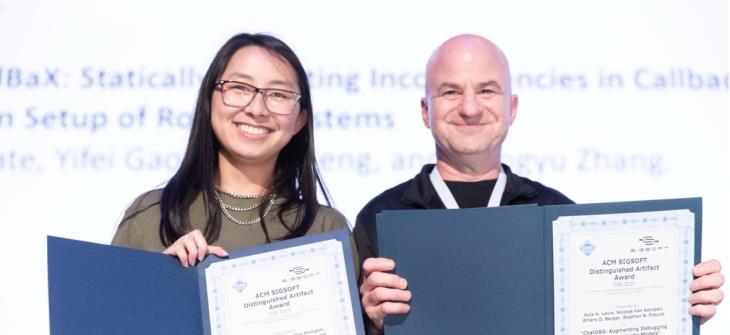

CICS PhD students Kyla Levin and Nicolas van Kempen, along with Professor Emery Berger and Professor Stephen Freund (Williams College), receive the 2025 FSE Distinguished Artifact Award for ChatDBG


An AI-enhanced debugging tool developed by computer science researchers from UMass Amherst and Williams College has earned a 2025 ACM International Conference on the Foundations of Software Engineering (FSE) Distinguished Artifact Award. The award honors outstanding software, data, and supplemental materials that significantly enhance reproducibility, verification, and future research.

The tool, ChatDBG, was developed by Manning College of Information and Computer Sciences (CICS) PhD students Kyla Levin and Nicolas van Kempen, along with Professor Emery Berger and Williams College Professor Stephen Freund.

Debugging is the process of identifying, analyzing, and fixing errors or unexpected behavior in software. It typically involves running a program, observing where and how it fails, and then examining the underlying code to find and resolve the cause, so that the software functions correctly and reliably. In their research paper, "ChatDBG: Augmenting Debugging with Large Language Models," the team describes how ChatDBG integrates large language models (LLMs) into the debugging process through a conversational interface. Programmers can pose complex questions about their programs, analyze program state in real time, and diagnose and resolve software issues more efficiently. ChatDBG works with widely used debuggers, including LLDB, GDB, and Python's Pdb, and lets the LLM autonomously query and control the debugger, navigate the call stack, and apply domain-specific reasoning to pinpoint critical bugs.

"Debuggers can be really amazing tools, but they're difficult to master, especially for beginners. That's why a lot of developers will still forgo a proper debugger in favor of old-fashioned print-debugging. What most developers really want is a tool that can do all of the tedious investigative work for them—something that can dig through the code, find out what's gone wrong, and suggest a fix all on its own. That's where ChatDBG comes in," says Levin. "By allowing the LLM to drive the process and take advantage of the debugger's deep knowledge about the program, ChatDBG upgrades an ordinary debugger into a much more intelligent and proactive assistant."

Extensive evaluations across diverse software environments, including native code and Python scripts, have validated ChatDBG's effectiveness. In these evaluations, a single query produced an actionable bug fix in 67% of Python cases, a figure that rose to 85% with one additional follow-up question. The tool has been downloaded more than 85,000 times since its release.

"We've been thrilled by the response to ChatDBG, and the excitement around it goes to show how many developers relate to this problem," says Levin. "There are always opportunities to expand ChatDBG with other traditional debugging and static analysis techniques, and we're excited to see where the work goes next!"